Learnings from a stint in the robotics space

Fresh off shipping a game and building a web backend for a crypto exchange, I took a job in an industry that could use my skills in developing simulations. Robotics stretches across a number of fields: it involves specialized hardware and relies on software to simulate the physics. I would regard it as among the tougher fields to get into.

Software in the Robotics Industry

Much of the software in the robotics industry is now built on a set of open-source packages called the Robot Operating System (ROS). Simply put, you install these packages on your robot and run them to get it to do what you want. They use a publish-subscribe (pub-sub) model, and all the connectivity is handled behind the scenes. To send a command to your robot, all you have to do is enter it on the command line.
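
To make the pub-sub idea concrete, here is a minimal sketch in plain Python. It is not the ROS API: the `ToyBus` class and the message layout are made up for illustration, and everything runs inside one process, whereas ROS handles delivery across processes and machines. The `/cmd_vel` topic name is only an illustrative choice.

```python
from collections import defaultdict


class ToyBus:
    """In-process stand-in for a pub-sub middleware (hypothetical, not ROS)."""

    def __init__(self):
        # Map each topic name to the list of callbacks subscribed to it.
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, callback):
        """Register a callback to be invoked for every message on topic."""
        self._subscribers[topic].append(callback)

    def publish(self, topic, message):
        """Deliver message to each subscriber of topic; return how many got it."""
        callbacks = self._subscribers.get(topic, [])
        for callback in callbacks:
            callback(message)
        return len(callbacks)


bus = ToyBus()
received = []

# The "robot" side listens for velocity commands and just records them here.
bus.subscribe("/cmd_vel", received.append)

# The "operator" side publishes without knowing who is listening.
delivered = bus.publish("/cmd_vel", {"linear": 0.2, "angular": 0.0})
```

The point is that the publisher never holds a reference to the subscriber. Both only agree on a topic name and a message shape.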

There is a simulator called Gazebo, built on the OGRE graphics engine. It is similar to a game engine but has an interface to ROS and more tools for simulating your environment. Its main use for roboticists is to correct their robot’s code as much as possible in a simulated environment before it is deployed in the real world. Unfortunately, it is fairly dated: while usable, it lacks the polish of commercial-grade game engines. There is another simulator in development called Ignition, but don’t expect it to reach parity soon.

On Hardware

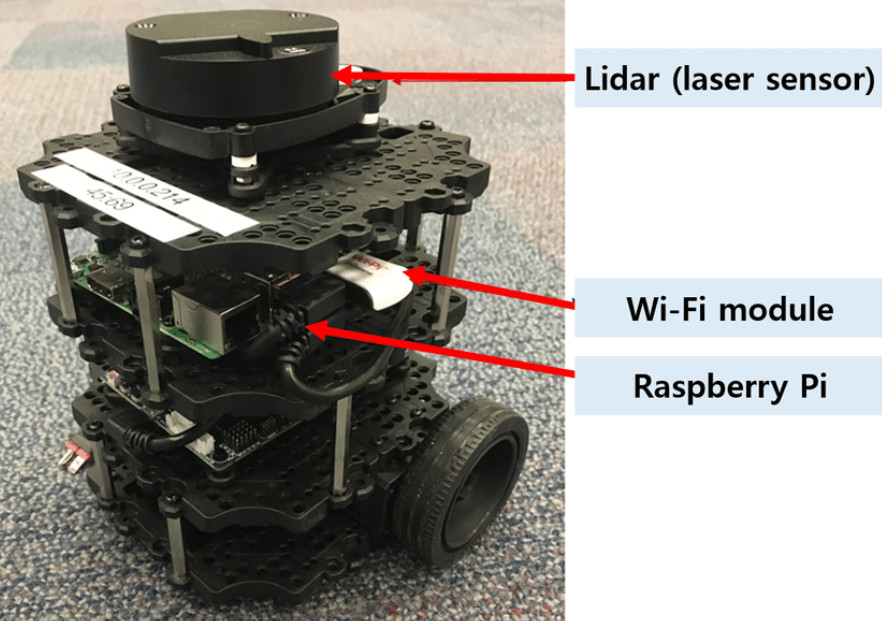

The first robot I deployed software on (through my company’s good graces) was a small TurtleBot. It’s a small, modular, and customizable 2-wheeled robot. You can connect over USB, SSH into its operating system, and install packages there. One particularly interesting feature is that it seemed to be made from a number of Raspberry Pi circuit boards stacked together. The essential parts of the robot fall into three categories: sensors, motors (for moving wheels, etc.), and controller boards. Each type has its own specifications and details.

A small TurtleBot with 3 components

While you can likely purchase commonly used hardware parts online, customized robot hardware parts can take a really long time to make and ship, as long as a few months. This can make iteration times on hardware really long.

The insights gained

When I got the job, I spent a couple of hours per day, on and off, trying to get through a robotics textbook as part of my efforts to integrate into the industry. For starters, I was glad to see linear algebra among the topics, since I already knew it from my time in game development. The really tough, meaty part was the advanced math combined with algorithms.

You start off with the field’s concepts of motion, called screws, twists, and wrenches, then learn about constraints, Jacobians, and Lagrangians along the way. There are topics on Forward Kinematics (find the position and orientation of the end-effector given the joint angles) and Inverse Kinematics (find the joint angles given the position and orientation of the end-effector).
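
As a small worked example of the two kinematics problems, here is a sketch for a planar arm with two revolute joints. The arm, its link lengths `l1` and `l2`, and the function names are my own assumptions for illustration. Real arms have more joints, and textbooks usually work with the screw-theory formulation rather than this trigonometric shortcut.

```python
import math


def forward_kinematics(theta1, theta2, l1=1.0, l2=1.0):
    """Return the (x, y) position of the end-effector for the given joint angles."""
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y


def inverse_kinematics(x, y, l1=1.0, l2=1.0):
    """Return one (theta1, theta2) pair that reaches (x, y), or None if out of reach."""
    # Law of cosines gives the elbow angle from the distance to the target.
    cos_theta2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if abs(cos_theta2) > 1.0:
        return None  # target is farther than l1 + l2 or closer than |l1 - l2|
    theta2 = math.acos(cos_theta2)  # one of two solutions; the mirror uses -theta2
    theta1 = math.atan2(y, x) - math.atan2(
        l2 * math.sin(theta2), l1 + l2 * math.cos(theta2)
    )
    return theta1, theta2


angles = inverse_kinematics(1.2, 0.5)
reached = forward_kinematics(*angles)
```

Feeding the inverse solution back through the forward function should land on the original target, which is a handy sanity check. Note that the inverse problem has two answers here (elbow up and elbow down), which hints at why it gets harder with more joints.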

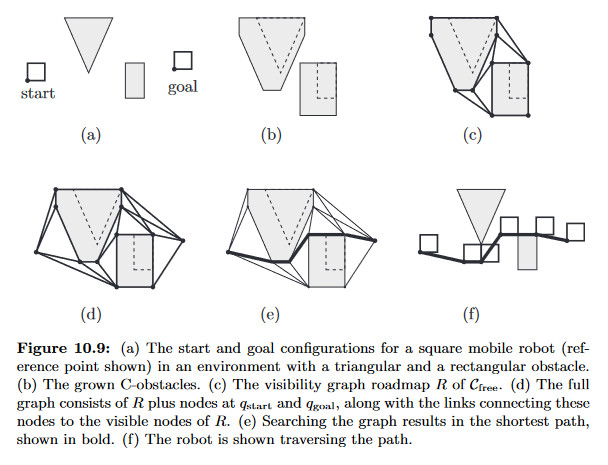

Motion Planning

If you want your robot to do something in the physical world, it needs to plan how to move. There are whole topics on visibility graph construction, A-star planning, and search algorithms here, nothing that a CS grad can’t handle. But before you dismiss it, it is worth learning the novel ways roboticists reuse these familiar algorithms. For example, if you need to plan for a multi-joint robot, you can treat each joint’s rotation as one axis of a coordinate space (the configuration space), discretize that space into a grid, brute-force check each cell for viability, and perform A-star on it!
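
The joint-angle grid idea can be sketched for a two-joint planar arm as follows. Everything specific here is an assumption for illustration: the 10-degree grid resolution, the circular `OBSTACLE`, the sampled collision check, and the choice not to wrap angles around at ±180 degrees.

```python
import heapq
import math

N = 36  # cells per joint, i.e. 10 degrees per cell (arbitrary resolution)
OBSTACLE = (1.2, 1.2, 0.4)  # hypothetical circle: centre x, centre y, radius


def cell_to_angles(cell):
    """Map a grid cell (i, j) to the joint angles at its centre, in radians."""
    step = 2.0 * math.pi / N
    return (cell[0] + 0.5) * step - math.pi, (cell[1] + 0.5) * step - math.pi


def in_collision(cell, l1=1.0, l2=1.0, samples=10):
    """Brute-force check: sample points along both links against the obstacle."""
    t1, t2 = cell_to_angles(cell)
    elbow = (l1 * math.cos(t1), l1 * math.sin(t1))
    tip = (elbow[0] + l2 * math.cos(t1 + t2), elbow[1] + l2 * math.sin(t1 + t2))
    ox, oy, radius = OBSTACLE
    for (ax, ay), (bx, by) in (((0.0, 0.0), elbow), (elbow, tip)):
        for k in range(samples + 1):
            s = k / samples
            px, py = ax + s * (bx - ax), ay + s * (by - ay)
            if math.hypot(px - ox, py - oy) <= radius:
                return True
    return False


def a_star(blocked, start, goal):
    """A-star on the 4-connected joint-angle grid; returns a list of cells or None."""
    def heuristic(cell):
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    frontier = [(heuristic(start), 0, start)]
    came_from = {start: None}
    cost = {start: 0}
    while frontier:
        _, g, cell = heapq.heappop(frontier)
        if cell == goal:
            path = []
            while cell is not None:  # walk parents back to the start
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > cost[cell]:
            continue  # stale heap entry; a cheaper route was already found
        for di, dj in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nxt = (cell[0] + di, cell[1] + dj)
            if not (0 <= nxt[0] < N and 0 <= nxt[1] < N) or nxt in blocked:
                continue
            if g + 1 < cost.get(nxt, math.inf):
                cost[nxt] = g + 1
                came_from[nxt] = cell
                heapq.heappush(frontier, (g + 1 + heuristic(nxt), g + 1, nxt))
    return None


# Check every cell for viability, then search the free cells.
blocked = {(i, j) for i in range(N) for j in range(N) if in_collision((i, j))}
start, goal = (0, 0), (N - 1, N - 1)
path = a_star(blocked, start, goal)
```

Each cell in `path` is a pair of joint angles, so the result is a sequence of arm poses rather than a route through the room. The grid grows exponentially with the number of joints, which is why this brute-force approach only works for small arms.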

The physical connection

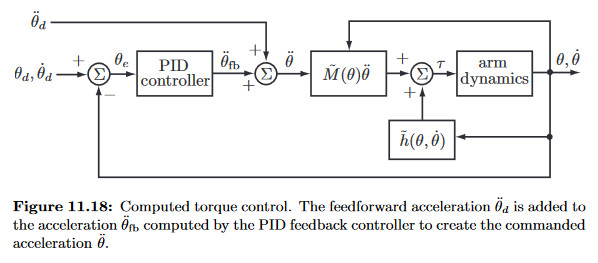

For starters, let’s look at how joints move. A joint’s rotational movement is powered by a motor; in physics terms, the motor applies a torque. A motor needs power as input to produce torque, and its software controller needs to compute how much power is needed to reach a certain torque.

Once that’s done, we need a sensor to tell us how close we are to the target. We can’t quite have a sensor that measures physical space directly, but fortunately our motor has its own sensors. From these, we obtain the error amount and feed it back to the controller to determine how much torque to produce for the next interval.

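This whole arrangement is a feedback control loop, and the rule the controller uses to turn the error into an output is called the control law. It is typically drawn as a block diagram of inputs, outputs, and error feedback. There are whole equations behind it, involving interesting terms like the Proportional-Integral-Derivative (PID) controller.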
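
Here is a minimal sketch of that feedback loop with a PID controller. The joint model (unit inertia, some damping, a constant load torque) and the gain values are assumptions I picked so that the toy simulation settles. They are not taken from any real motor.

```python
class PID:
    """Textbook PID controller; the gains used below are illustrative assumptions."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def update(self, target, measured, dt):
        """Return the commanded torque for one control interval of length dt."""
        error = target - measured
        self.integral += error * dt
        # No derivative term on the first call, since there is no previous error.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate(target=1.0, seconds=10.0, dt=0.01, damping=0.5, load=1.0):
    """Drive a hypothetical unit-inertia joint toward target; return the final angle."""
    pid = PID(kp=12.0, ki=8.0, kd=5.5)
    angle, velocity = 0.0, 0.0
    for _ in range(int(seconds / dt)):
        torque = pid.update(target, angle, dt)       # controller output
        accel = torque - damping * velocity - load   # toy joint dynamics
        velocity += accel * dt
        angle += velocity * dt                       # "sensor" reading for next step
    return angle


final_angle = simulate()
```

The proportional term reacts to the current error, the derivative term damps the motion, and the integral term slowly builds up enough torque to cancel the constant load.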

Achieving autonomous navigation for a robot

A major problem being worked on in the field is robot autonomy. In the indoor version of this problem, the robot has to ‘discover’ its indoor surroundings while planning and navigating through them safely. It also has to figure out where it is in the world through its sensors, a process called localization. Building the map while localizing within it is called Simultaneous Localization and Mapping (SLAM).

It goes without saying that the better the data your sensors give you, the better your algorithms work. There will be a lot of rubbish data, and algorithmic filters like the Kalman filter or the particle filter will weed out some of it. I am unsure about this, but I have heard that nowadays most sensors have their own filters implemented in hardware, using FPGAs for the computation. That also seems to fall under the purview of mechanical engineers.
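
To give a feel for what such a filter does, here is a sketch of the simplest possible Kalman filter: one scalar value that is assumed to stay roughly constant, measured by a noisy sensor. The noise variances and the simulated readings are made up for illustration. Real robots track multi-dimensional state with a motion model.

```python
import random


def kalman_1d(measurements, meas_var, process_var, x0=0.0, p0=1.0):
    """Filter noisy readings of a roughly constant value; return all estimates."""
    x, p = x0, p0  # current estimate and its variance
    estimates = []
    for z in measurements:
        p += process_var        # predict: the value may have drifted a little
        k = p / (p + meas_var)  # Kalman gain: how much to trust the new reading
        x += k * (z - x)        # update: move the estimate toward the reading
        p *= 1.0 - k            # the estimate is now more certain
        estimates.append(x)
    return estimates


random.seed(0)
true_value = 2.0
readings = [true_value + random.gauss(0.0, 0.5) for _ in range(200)]
estimates = kalman_1d(readings, meas_var=0.25, process_var=1e-5)
```

Early on the gain is large and the estimate jumps around with the readings. As the variance `p` shrinks, each new reading nudges the estimate less, which is what smooths out the rubbish.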

Wrapping it up

There’s no doubt that this is among the tougher subjects a math-inclined person can take on. The math alone takes up a whole bunch of headspace and effort, so it’s no wonder many roboticists I encountered have a master’s degree or higher. The iteration speeds are not the best either, and getting the robot specifications wrong only makes them worse. It’s also likely that new, higher-quality simulators from other industries will enter the space and the simulator iteration situation will improve over time. Meanwhile, we’ll have to wait and see.